In a paper published by the Institute of Electrical and Electronics Engineers (IEEE), researchers have developed a method to increase the authenticity of low-cost, projection-based augmented reality installations. Special glasses cause projected 3D images to go in and out of focus in the same way they would if the objects were real, overcoming a critical perceptual hurdle to the practical use of projection systems in controlled environments.

The system recreates depth planes for real and CGI imagery projected into rooms. Here, three CGI Stanford bunnies are superimposed at the same depth plane as three real-world objects, and their blurriness is controlled by where the viewer is looking and focusing. 3D projectors can put footage onto fixed surfaces, moving surfaces, or even complex geometry, offering wide coverage that is difficult to achieve under the severe processing limitations of wearable AR systems such as HoloLens. Source: https://www.youtube.com/watch?v=I8DGTQnxm38

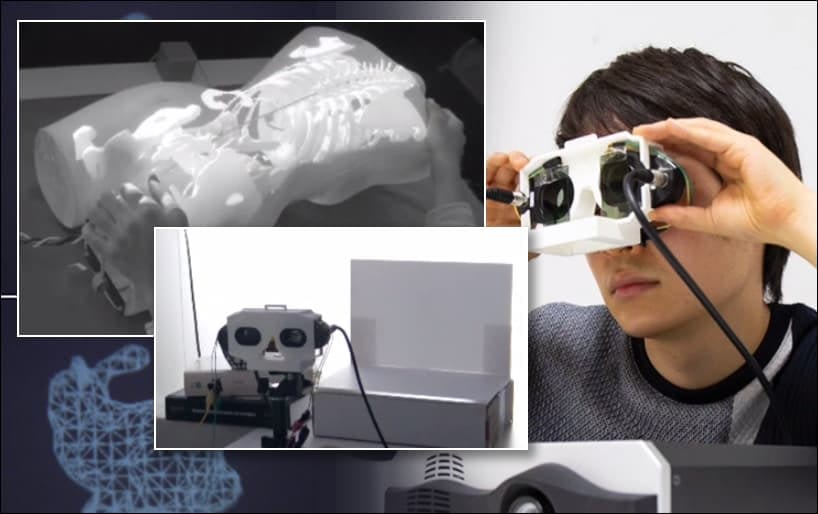

The system uses electrically focus-tunable lenses (ETLs) embedded in the viewer's glasses. The glasses are needed in any case to separate the two image streams into a convincing, integrated 3D experience; they also communicate with the projection system, which then automatically adjusts the blurriness of the projected image seen by the viewer.

The ETLs report the user's focal attention back to the system, which sets the level of blur on a per-plane basis when rendering the projected geometry. Development of the system is outlined in an accompanying video.
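The article does not describe the blur model the system uses. As a rough illustration only, the sketch below assumes standard geometric optics: the blur a plane should receive grows with its dioptric distance (diopters, i.e. 1/metres) from the viewer's current focus, and the angular size of the blur circle is approximately the pupil diameter multiplied by that defocus. The 4 mm pupil, the plane depths and the helper names are hypothetical, not taken from the paper.

```python
import math

def defocus_diopters(focus_m: float, plane_m: float) -> float:
    """Dioptric distance between the viewer's focus and a depth plane."""
    return abs(1.0 / focus_m - 1.0 / plane_m)

def angular_blur_arcmin(focus_m: float, plane_m: float, pupil_mm: float = 4.0) -> float:
    """Approximate angular diameter of a plane's blur circle, in arcminutes.

    Small-angle geometric optics: blur (radians) ~= pupil diameter (m) x defocus (D).
    The 4 mm default pupil is a hypothetical value.
    """
    blur_rad = (pupil_mm / 1000.0) * defocus_diopters(focus_m, plane_m)
    return math.degrees(blur_rad) * 60.0

# Hypothetical scene: three depth planes, viewer currently focused at 1 m.
planes_m = [0.5, 1.0, 2.0]
focus_m = 1.0
blur = {plane: angular_blur_arcmin(focus_m, plane) for plane in planes_m}
for plane, arcmin in blur.items():
    print(f"plane at {plane} m: {arcmin:.1f} arcmin of blur")
```

Note that the plane at 0.5 m gets twice the blur of the plane at 2 m (1 D versus 0.5 D of defocus), even though it is physically closer to the 1 m focus; working in diopters rather than metres is what keeps the per-plane comparison consistent with how the eye actually defocuses.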

The paper, titled Multifocal Stereoscopic Projection Mapping, offers a new level of usability to a field that has been limited by its lack of integration with the way users focus on different objects. It promises to overcome the problems such systems have had with vergence-accommodation conflict (VAC), a condition in which the distance at which the eyes converge on a virtual object does not match the distance at which they must focus to see it. The result is that the object 'floats' in an unconvincingly sharp manner where, given its placement, it should be defocused.
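VAC is commonly quantified in diopters. In stereoscopic projection mapping, the eyes converge at the depth where a virtual object appears, but to see it sharply they must focus on the physical surface that actually carries the projected light. The minimal sketch below assumes symmetric fixation straight ahead; the 64 mm interpupillary distance, the distances and the function names are hypothetical illustrations.

```python
import math

def vergence_distance_m(ipd_m: float, vergence_angle_rad: float) -> float:
    """Fixation distance implied by the angle between the two lines of sight.

    Assumes symmetric fixation on a point straight ahead of the viewer.
    """
    return (ipd_m / 2.0) / math.tan(vergence_angle_rad / 2.0)

def vac_diopters(vergence_m: float, accommodation_m: float) -> float:
    """Vergence-accommodation mismatch in diopters (1/metres)."""
    return abs(1.0 / vergence_m - 1.0 / accommodation_m)

# Hypothetical setup: a virtual object rendered to appear 0.5 m away,
# while its pixels actually land on a wall 2 m away.
ipd = 0.064
angle = 2.0 * math.atan((ipd / 2.0) / 0.5)  # vergence angle for a 0.5 m target
conflict = vac_diopters(vergence_distance_m(ipd, angle), 2.0)
print(f"{conflict:.2f} D")  # 1.50 D
```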

In wearable AR systems such as Microsoft's HoloLens, foveated rendering is used to concentrate processing power, allocating rendering detail and focus according to where the wearer is looking and focusing. However, wearable systems of this kind carry a much higher on-board hardware load, since they must generate and deliver the 3D image to the viewer on the device itself.
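The general idea behind foveated rendering is to grade detail by angular distance (eccentricity) from the gaze point. The sketch below is a generic illustration of that idea, not how HoloLens or any specific device implements it; the three detail tiers, the 5° and 20° thresholds and the function names are hypothetical.

```python
import math

def eccentricity_deg(gaze, direction):
    """Angle in degrees between the gaze vector and the direction to a screen region."""
    dot = sum(g * d for g, d in zip(gaze, direction))
    norms = math.hypot(*gaze) * math.hypot(*direction)
    cos_angle = max(-1.0, min(1.0, dot / norms))  # clamp against rounding error
    return math.degrees(math.acos(cos_angle))

def detail_divisor(ecc_deg, fovea_deg=5.0, mid_deg=20.0):
    """Hypothetical tiers: 1 = full resolution, 2 = half, 4 = quarter."""
    if ecc_deg <= fovea_deg:
        return 1
    if ecc_deg <= mid_deg:
        return 2
    return 4

gaze = (0.0, 0.0, 1.0)  # looking straight ahead
regions = {
    "centre": (0.0, 0.0, 1.0),
    "10 deg off-axis": (math.tan(math.radians(10)), 0.0, 1.0),
    "45 deg off-axis": (1.0, 0.0, 1.0),
}
for name, direction in regions.items():
    print(name, detail_divisor(eccentricity_deg(gaze, direction)))
```

Only the small foveal region is rendered at full cost, which is why the technique doubles as a way of coping with limited on-board hardware.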

The Advantage of Projected Augmented Reality

By contrast, ETL-enabled glasses simply send focal information as an additional variable to a remote CGI pipeline, which can change the focus of projected imagery faster than a wearable AR device can complete its own round trip for focal information (i.e. focus information > sent to remote processor > rendered > sent back to wearer). The result is lower latency, which matters because latency is itself a potential cause of viewer disorientation in AR systems.

In effect, foveated rendering is used as much to accommodate limited resources as it is to provide an authentic focal experience for the user. Large areas of superimposed imagery are difficult to achieve in HoloLens-style systems, and limited 'letterbox rendering' and unstable edges are a consistent complaint.

From SIGGRAPH 98 – a vision of augmented reality in an office environment, cited in the new paper. Source: https://www.youtube.com/watch?v=I8DGTQnxm38 and https://web.media.mit.edu/~raskar/UNC/Office/

The paper outlines several known advantages that stereoscopic projection mapping (PM) has over more recent implementations of augmented reality, which rely on heavy, body-worn equipment. As the authors note*:

First, the field-of-view (FOV) can be made as wide as possible by increasing the number of projectors to cover the entire environment. Second, the active-shutter glasses used are normally much lighter, and, thus, their physical burden is less than HMDs. Third, multiple users can share the same AR experience if their viewpoints are sufficiently close to each other. Thanks to these advantages, researchers have found stereoscopic PM to be suitable for a wide range of applications, including but not limited to museum guides, architecture planning, product design, medical training, shape-changing interfaces, and teleconferencing.

One such implementation was devised by Microsoft Research in 2012, before the company's recent concentration on in-device AR.

The researchers contend that their new focus-input system is the first to address VAC by controlling multiple focal planes, and also the first to solve the problem in a generic, widely applicable way, without the need for expensive, specialized projection equipment.

The focus-centered rendering pipeline devised by the researchers incorporates focal information from the viewer's ETL glasses at the very start of the rendering process, rather than requiring the base computer to render and then blur. Depending on the implementation, this can further save processing resources and reduce latency as the viewer's focal gaze wanders around the virtual elements.

The technique is reported to work well on a variety of projection surfaces, including flat, non-planar (i.e. curved or complex geometry, such as dummies onto which medical x-ray imagery could be projected) and moving surfaces.

A mixed reality mannequin that uses 3D projection, designed for a medical education environment, cited in the paper. Source: https://link.springer.com/chapter/10.1007/978-1-4614-0064-6_23

Projection systems of this type require dark environments, such as museum settings, and the ETLs narrow the viewer's field of view, though the researchers contend that the trend toward larger ETL apertures will mitigate this limitation over time. The authors also note that the system requires a high-speed projector to provide enough frames to split into two streams, although they used an off-the-shelf, commercially available projector for their implementation.
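Two back-of-the-envelope calculations make these constraints concrete. Both are simplifications with hypothetical numbers and hypothetical function names. The frame budget assumes every eye and every focal plane gets its own time slot on a single projector; the article mentions only the split into two eye streams, so the per-plane division is an assumption about multifocal designs in general. The field-of-view estimate treats the eye as a point looking through a circular lens aperture and ignores the lens's optical power.

```python
import math

def per_plane_rate_hz(projector_fps: float, eyes: int = 2, focal_planes: int = 1) -> float:
    """Rate at which each eye sees each focal plane under time multiplexing."""
    return projector_fps / (eyes * focal_planes)

def aperture_fov_deg(aperture_mm: float, eye_to_lens_mm: float) -> float:
    """Full angular field visible through a circular aperture (pinhole-eye approximation)."""
    return math.degrees(2.0 * math.atan(aperture_mm / (2.0 * eye_to_lens_mm)))

# Hypothetical values, not taken from the paper.
print(per_plane_rate_hz(360, eyes=2, focal_planes=3))  # 60.0
print(round(aperture_fov_deg(10.0, 20.0), 1))          # 28.1
print(round(aperture_fov_deg(20.0, 20.0), 1))          # 53.1
```

Under these assumptions, each extra focal plane divides the per-eye refresh rate further, which is why projector speed matters; and doubling the aperture at the same eye distance widens the visible field from about 28° to about 53°, consistent with the authors' expectation that larger ETL apertures will ease the viewing-angle limitation.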

*My conversion of inline citations to hyperlinks.
