Researchers from Cornell University have designed a photo-taking robot that understands aesthetically pleasing composition. It already does well at interior photos for real estate and Airbnb listings, and it could be trained to apply its skills in other settings.

The robotic system, called AutoPhoto, was developed by master’s student Hadi AlZayer along with Hubert Lin and Kavita Bala, two other researchers from the Cornell Ann S. Bowers College of Computing. AlZayer tells the Cornell Chronicle that the idea grew out of his wish for a companion that could take photos from better angles than a selfie allows, and with better composition than he could get by asking a stranger.

“Whenever I asked strangers to take photos for me, I’d end up with photos that were poorly composed,” he said. “It got me thinking.”

The factors a person weighs when composing an aesthetically pleasing photo are complicated, but AlZayer believed the process could be automated with an algorithm. After creating the basic algorithm, he refined it using a machine learning approach called “reinforcement learning.”

AutoPhoto is believed to be the first robotic system to use a learned aesthetic model, and it represents a major step toward using autonomous robots to document spaces. As Engadget explains, its current version can photograph interiors for real estate listings or rentals such as Airbnb.

The Robot Knows What Makes a Photo “Good”

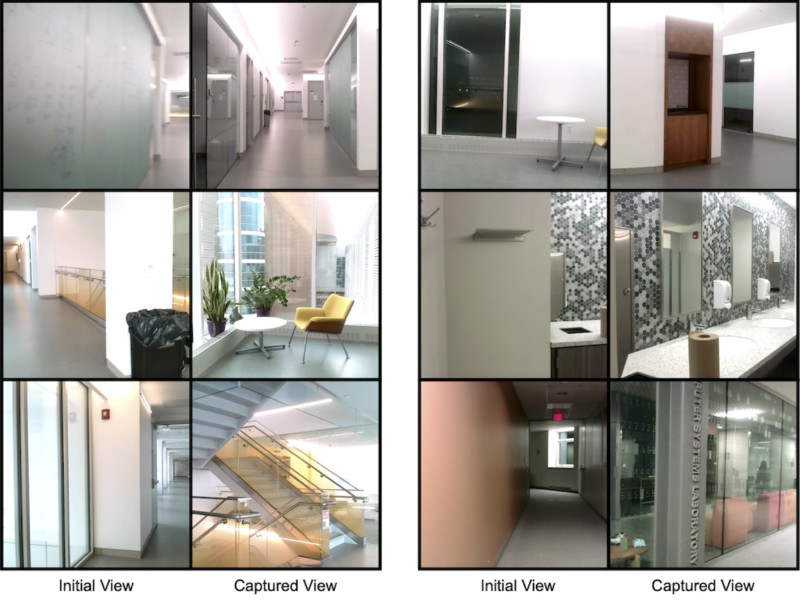

The process starts with what can only be described as bad photos. The robot then uses the AutoPhoto algorithm to refine its framing step by step while building a better sense of its environment. After about a dozen iterations, it produces a photo comparable in visual quality to what you would see on Airbnb or Zillow.

“You can essentially take incremental improvements to the current commands,” AlZayer tells Engadget. “You can do it one step at a time, meaning you can formulate it as a reinforcement learning problem.”

Because the robot learns this way, the researchers do not need to teach it more “traditional” photography techniques like the golden ratio or the rule of thirds, “rules” that many photographers end up disregarding later in their careers anyway.

“This aesthetic model helps the robot determine if the photos it takes are good or not,” AlZayer says.
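To make the idea of “one step at a time” concrete, here is a toy sketch of how photo capture can be framed as a sequential decision problem: the robot holds a camera pose, chooses among small incremental moves, and is rewarded by how much each move raises an aesthetic score. This is not AutoPhoto’s code. The `Pose` type, the `ACTIONS` set, and the `aesthetic_score` function are all hypothetical stand-ins (the real system uses a learned aesthetic model on images), and the greedy one-step policy replaces a policy trained with reinforcement learning so the example runs without any training. The 12-step cap loosely mirrors the “about a dozen iterations” mentioned above.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    """Hypothetical camera pose: position in meters, heading in radians."""
    x: float
    y: float
    yaw: float


# Hypothetical discrete action set: small incremental camera moves.
ACTIONS = {
    "forward": (0.25, 0.0, 0.0),
    "backward": (-0.25, 0.0, 0.0),
    "left": (0.0, 0.25, 0.0),
    "right": (0.0, -0.25, 0.0),
    "turn_left": (0.0, 0.0, math.radians(10)),
    "turn_right": (0.0, 0.0, -math.radians(10)),
}


def apply(pose: Pose, action: str) -> Pose:
    """Return the pose reached by taking one incremental move."""
    dx, dy, dyaw = ACTIONS[action]
    return Pose(pose.x + dx, pose.y + dy, pose.yaw + dyaw)


def aesthetic_score(pose: Pose) -> float:
    """Toy stand-in for a learned aesthetic model (NOT AutoPhoto's model).

    It simply peaks at an arbitrary 'ideal' viewpoint; a real model would
    score the image captured from this pose.
    """
    ideal = Pose(2.0, 1.0, math.radians(30))
    return -((pose.x - ideal.x) ** 2
             + (pose.y - ideal.y) ** 2
             + (pose.yaw - ideal.yaw) ** 2)


def reward(pose: Pose, action: str) -> float:
    """RL-style reward: the improvement in aesthetic score from one move."""
    return aesthetic_score(apply(pose, action)) - aesthetic_score(pose)


def greedy_episode(start: Pose, max_steps: int = 12):
    """Greedy stand-in for a trained policy: take the best single move
    until no move improves the score or the step budget runs out."""
    pose = start
    trajectory = [pose]
    for _ in range(max_steps):
        best = max(ACTIONS, key=lambda a: reward(pose, a))
        if reward(pose, best) <= 0:
            break  # no single move helps: stop here and take the photo
        pose = apply(pose, best)
        trajectory.append(pose)
    return pose, trajectory


if __name__ == "__main__":
    final, steps = greedy_episode(Pose(0.0, 0.0, 0.0))
    print(f"{len(steps) - 1} moves, final score {aesthetic_score(final):.3f}")
```

Framing the reward as the change in score, rather than the score itself, is what lets each small move be judged on its own; a reinforcement learning agent would instead learn which moves pay off over several steps, including moves that look worse in the short term.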

The hardest part of developing AutoPhoto was that the team had to start entirely from scratch: there was no existing baseline to improve on. Aesthetics are subjective, and encoding subjectivity in an algorithm is particularly challenging, so the team had to define both the problem and the entire process themselves.

“This kind of work is not well explored, and it’s clear there could be useful applications for it,” Hubert Lin, a doctoral student in computer science and co-author of the research paper “AutoPhoto: Aesthetic Photo Capture using Reinforcement Learning,” tells the Cornell Chronicle.

Still, the researchers see AutoPhoto as just the beginning of autonomous photography, which could let robots capture aesthetically pleasing images of dangerous or remote environments without human intervention. It’s one thing to have photos of Mars; it’s another to have pretty photos of Mars. That, the research team argues, is where AutoPhoto could prove especially useful in the future.

Image credits: Header photo licensed via Depositphotos.
