Dr. Zhiliu Yang, a recent Ph.D. graduate in electrical and computer engineering at Clarkson University, and his advisor, Dr. Chen Liu, associate professor of electrical and computer engineering, presented their paper "TUPPer-Map: Temporal and Unified Panoptic Perception for 3D Metric-Semantic Mapping" at the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2021). The conference was held online from September 27 to October 1, 2021.

The IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) is one of the largest and most influential robotics research conferences in the world. Established in 1988 and held annually, IROS gives the international robotics research community a forum to explore the frontiers of science and technology in intelligent robots and smart machines.

Metric-semantic scene understanding is one of the most critical abilities intelligent agents need if autonomous driving and mobile robotics are to reach the next level. Advances in deep learning have substantially improved 3D mapping techniques. At the same time, the growing complexity of map building demands robot vision that can detect, segment, track, and reconstruct objects at the level of individual instances.

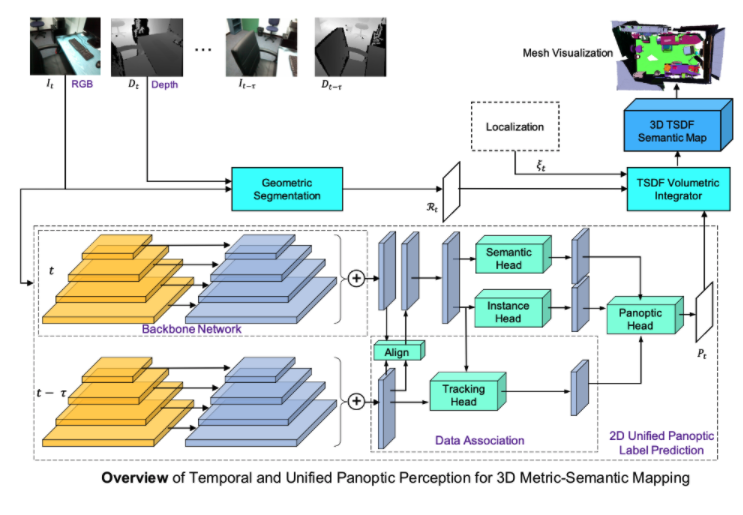

Traditionally, these perception problems have been treated as separate tasks. Yang and Liu's paper proposes TUPPer-Map, a metric-semantic mapping framework built on unified panoptic segmentation and temporal data association. Unlike previous mapping methods, the framework folds the data association stage into the holistic pixel-level segmentation stage in an end-to-end fashion, exploiting both intra-frame and inter-frame spatial and temporal knowledge. First, the authors merge the instance segmentation and semantic segmentation branches into a single network with a shared backbone, maximizing 2D panoptic segmentation performance. Next, they use geometric segmentation to refine the segments predicted by the deep network. They then design a novel deep learning-based data association module that tracks object instances across frames: the optical flow between consecutive frames and the alignment of region of interest (ROI) candidates are learned in order to predict instance labels that stay consistent from frame to frame. Finally, the 2D semantics are integrated into a 3D volume through truncated signed distance function (TSDF) ray-casting to build the final map. Their experimental results show that TUPPer-Map outperforms existing semantic mapping methods. Overall, the work illustrates that a learning-based data association strategy can enable a more unified perception network for 3D mapping.
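To make the idea of frame-consistent instance labels more concrete, here is a minimal sketch of flow-based data association. It is not TUPPer-Map's module, which learns the association end to end; it is a classical, non-learned stand-in that assumes masks are sets of pixel coordinates and that a per-pixel integer optical flow is already available. The function names `warp_mask`, `iou`, and `associate` and the 0.5 IoU threshold are hypothetical choices made for illustration.

```python
from typing import Dict, Set, Tuple

Pixel = Tuple[int, int]  # (row, col)
Flow = Dict[Pixel, Tuple[int, int]]  # hypothetical per-pixel (drow, dcol) flow


def warp_mask(mask: Set[Pixel], flow: Flow) -> Set[Pixel]:
    """Shift each pixel of a previous-frame mask by its flow vector.

    Pixels without a flow entry are assumed to stay in place.
    """
    warped = set()
    for (r, c) in mask:
        dr, dc = flow.get((r, c), (0, 0))
        warped.add((r + dr, c + dc))
    return warped


def iou(a: Set[Pixel], b: Set[Pixel]) -> float:
    """Intersection-over-union of two pixel sets."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def associate(prev_tracks: Dict[int, Set[Pixel]],
              curr_segments: Dict[int, Set[Pixel]],
              flow: Flow,
              threshold: float = 0.5) -> Dict[int, int]:
    """Map each current-frame segment index to a track ID.

    Previous masks are warped into the current frame, then matched greedily
    by descending IoU; unmatched segments receive fresh track IDs.
    """
    warped = {tid: warp_mask(m, flow) for tid, m in prev_tracks.items()}

    # Collect every (segment, track) pair whose overlap clears the threshold.
    candidates = []
    for seg_idx, seg in curr_segments.items():
        for tid, wm in warped.items():
            score = iou(seg, wm)
            if score >= threshold:
                candidates.append((score, seg_idx, tid))
    candidates.sort(reverse=True)

    # Greedy one-to-one assignment, best overlaps first.
    labels: Dict[int, int] = {}
    used = set()
    for _, seg_idx, tid in candidates:
        if seg_idx in labels or tid in used:
            continue
        labels[seg_idx] = tid
        used.add(tid)

    # Segments that matched no existing track start new tracks.
    next_id = max(prev_tracks, default=-1) + 1
    for seg_idx in sorted(curr_segments):
        if seg_idx not in labels:
            labels[seg_idx] = next_id
            next_id += 1
    return labels
```

For example, if track 7 is a 2x2 square that moves one pixel to the right, the flow-warped mask overlaps the new segment exactly and keeps ID 7, while an unrelated segment gets ID 8. Without the flow, the same square overlaps its new position with an IoU of only 2/6 and would wrongly start a new track, which is why temporal cues such as optical flow matter for consistent labels.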

Yang successfully defended his dissertation, "Learning Geometry and Semantics via Deep Nets towards Global Localization and Mapping," and graduated with his Ph.D. in Electrical and Computer Engineering in summer 2021.

