By John P. Desmond, AI Trends Editor

Since the European Commission in April proposed rules and a legal framework in its Artificial Intelligence Act (See AI Trends, April 22, 2021), the US Congress and the Biden Administration have followed with a range of proposals that set the direction for AI regulation.

“The EC has set the tone for upcoming policy debates with this ambitious new proposal,” stated authors of an update on AI regulations from Gibson Dunn, a law firm headquartered in Los Angeles.

Unlike the comprehensive legal framework proposed by the European Union, regulatory guidelines for AI in the US are being proposed on an agency-by-agency basis. Developments include the US Innovation and Competition Act of 2021, “sweeping bipartisan R&D and science-policy regulation,” as described by Gibson Dunn, which moved rapidly through the Senate.

“While there has been no major shift away from the previous ‘hands off’ regulatory approach at the federal level, we are closely monitoring efforts by the federal government and enforcers such as the FTC to make fairness and transparency central tenets of US AI policy,” the Gibson Dunn update stated.

Many in the AI community are acknowledging the lead role being taken on AI regulation by the European Commission, and many see it as the inevitable path.

European Commission’s AI Act Seen as “Reasonable”

“Right now, every forward-thinking enterprise in the world is trying to figure out how to use AI to its advantage. They can’t afford to miss the opportunities AI presents. But they also can’t afford to be on the wrong side of the moral equation or to make mistakes that could jeopardize their business or cause harm to others,” stated Johan den Haan, CTO at Mendix, a company offering a model-driven, low-code approach for building AI systems, writing recently in Forbes.

Downside risks of AI in business include racial, gender, and other biases in AI algorithms. With humans doing the programming, algorithms are subject to human bias, limitations, and bad intentions. State actors have experimented with ways to influence population behaviors and election outcomes. “AI can enable these manipulations at mass scale,” den Haan stated.

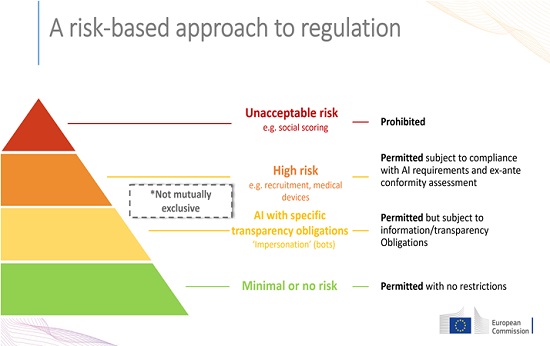

The proposed EU regulations would require high-risk AI systems to follow requirements regarding datasets, documentation, transparency and human oversight. The EU regulations would ban uses counter to fundamental human rights, including subliminal techniques. And they would ban real-time use by law enforcement of biometric identification systems, such as facial recognition, in publicly accessible spaces, except for specific cases of crime or terrorism.

“The proposal seems reasonable,” den Haan stated. At the same time, the EU regulations propose many uses of AI be subject to minimal transparency requirements, including chatbots and emotion recognition systems. He sees the EU’s approach as an attempt to balance protection and incentive. “It leaves plenty of room for innovation while offering critical basic protections,” den Haan stated. “Hopefully, other countries, including the US—which currently has a patchwork of AI regulations only at the local level—will follow the EU’s lead.”

Many believe that, left unchecked, the biases and issues around AI will cause harm and reinforce existing inequities. Gartner estimates that by 2022, 85% of AI projects will give inaccurate results due to bias in data, algorithms or the humans managing them, according to a recent account from Toolbox.

US AI Regulatory Environment Seen as “Complex and Unpredictable”

While it might be nice to achieve consensus on the risks of AI and how to address them, that is not happening now in the US, where in addition to proposed regulations from Congress, agency regulators are also making proposals. Five financial regulators in March issued a request for information on how financial institutions are using AI services. Shortly after, the Federal Trade Commission issued guidance on what it considers “unfair” use of AI. And the US National Institute of Standards and Technology (NIST) has proposed an approach for identifying and managing AI bias.

“These activities and more emerging on the national level, combined with efforts by individual states and cities to introduce their own laws and regulations around AI education, research, development, and use, have created an increasingly complex and unpredictable regulatory climate in the U.S.,” stated Ritu Jyoti, Group Vice President, Worldwide AI and Automation Research at IDC, a market intelligence firm.

Others suggest AI regulation can be a matter of degree, with more regulation required where risk is higher. “What is required is an approach to AI regulation that takes the middle ground,” stated Brandon Loudermilk, director of data science and engineering at Spiceworks Ziff Davis. “Taking a flexible, pragmatic approach to AI regulation helps society safeguard the common good in cases where the risk is greatest, while continuing to support general R&D innovation in AI by avoiding extensive regulatory efforts,” he stated.

Advice from HBR on How to Prepare for Coming AI Regulations

With the coming regulations beginning to take shape, companies using AI in their businesses can begin to prepare, suggests an account in the Harvard Business Review.

“Three central trends unite nearly all current and proposed laws on AI, which means that there are concrete actions companies can undertake right now to ensure their systems don’t run afoul of any existing and future laws and regulations,” stated Andrew Burt, managing partner of bnh.ai, a boutique law firm focused on AI and analytics, and chief legal officer at Immuta, a company offering universal cloud data access control.

Specifically, first conduct assessments of AI risks and document how the risks have been minimized or resolved. Regulatory frameworks refer to these types of assessments as “algorithmic impact assessments” or “IA for AI,” the author stated.

Second, have the risk of the AI be assessed by different technical personnel than those who originally developed it, or hire outside experts to conduct the assessments, to demonstrate that the evaluation is independent.

“Ensuring that clear processes create independence between the developers and those evaluating the systems for risk is a central component of nearly all new regulatory frameworks on AI,” Burt stated.

Third, put in place a system to continuously review the AI system, after the assessments and independent reviews have been conducted. “AI systems are brittle and subject to high rates of failure, AI risks inevitably grow and change over time — meaning that AI risks are never fully mitigated in practice at a single point in time,” Burt stated.

Just do all that, and your AI systems will be low-risk and safe for your company.

Read the source articles and information from Gibson Dunn, in Forbes, from Toolbox and in the Harvard Business Review.
