A new research collaboration between the US and China has probed the susceptibility to deepfakes of some of the biggest face-based authentication systems in the world, and found that most of them are vulnerable to developing and emerging forms of deepfake attack.

The researchers carried out deepfake-based intrusions using a custom framework deployed against Facial Liveness Verification (FLV) systems that are commonly supplied by major vendors and sold as a service to downstream clients such as airlines and insurance companies.

From the paper, an overview of the functioning of Facial Liveness Verification (FLV) APIs across major providers. Source: https://arxiv.org/pdf/2202.10673.pdf

Facial Liveness Verification is intended to repel techniques such as adversarial image attacks, masks, pre-recorded video, so-called ‘master faces’, and other forms of visual ID cloning.

The study concludes that the few deepfake-detection modules deployed in these systems, many of which serve millions of customers, are far from infallible. They may have been configured against deepfake techniques that are now outmoded, or they may be too architecture-specific.

The authors note:

‘[Different] deepfake methods also show variations across different vendors…Without access to the technical details of the target FLV vendors, we speculate that such variations are attributed to the defense measures deployed by different vendors. For instance, certain vendors may deploy defenses against specific deepfake attacks.’

And continue:

‘[Most] FLV APIs do not use anti-deepfake detection; even for those with such defenses, their effectiveness is concerning (e.g., it may detect high-quality synthesized videos but fail to detect low-quality ones).’

The researchers observe, in this regard, that ‘authenticity’ is relative:

‘[Even] if a synthesized video is unreal to humans, it can still bypass the current anti-deepfake detection mechanism with a very high success rate.’

Above, sample deepfake images that were able to authenticate in the authors’ experiments. Below, apparently far more realistic faked images that failed authentication.

Another finding was that the current configuration of generic facial verification systems is biased towards white males. Consequently, female and non-white identities were found to be more effective at bypassing verification systems, putting customers in those categories at greater risk of breach via deepfake-based techniques.

The report finds that white male identities are most rigorously and accurately assessed by the popular facial liveness verification APIs. In the table above, we see female and non-white identities can be more easily used to bypass the systems.

The paper observes that ‘there are biases in [Facial Liveness Verification], which may bring significant security risks to a particular group of people.’
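Demographic bias of this kind is usually quantified by comparing attack success rates across groups. The sketch below shows that bookkeeping. It assumes a hypothetical record format (`Attempt`) and does not reproduce the paper's own evaluation code or data.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class Attempt(NamedTuple):
    """One spoofing attempt against a liveness API (hypothetical record format)."""
    group: str       # demographic label of the target identity
    bypassed: bool   # True if the deepfake was accepted as live


def bypass_rate_by_group(attempts: Iterable[Attempt]) -> dict[str, float]:
    """Return the fraction of successful bypasses for each demographic group."""
    totals: dict[str, int] = defaultdict(int)
    successes: dict[str, int] = defaultdict(int)
    for a in attempts:
        totals[a.group] += 1
        if a.bypassed:
            successes[a.group] += 1
    return {g: successes[g] / totals[g] for g in totals}


def max_rate_gap(rates: dict[str, float]) -> float:
    """Largest difference in bypass rate between any two groups (0.0 if none)."""
    return max(rates.values()) - min(rates.values()) if rates else 0.0
```

A large gap between groups indicates that the verifier is easier to fool for some identities than for others. That is the kind of risk the authors describe.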

The authors also conducted ethical facial authentication attacks against a Chinese government, a major Chinese airline, one of the biggest life insurance companies in China, and R360, one of the largest unicorn investment groups in the world, and report success in bypassing these organizations’ downstream use of the studied APIs.

In the case of the Chinese airline, the downstream API required the user to ‘shake their head’ as proof against potential deepfake material. This check failed against the researchers’ framework, which incorporates six deepfake architectures.

Despite the airline’s evaluation of a user’s head-shake, deepfake content was able to pass the test.

The paper notes that the authors contacted the vendors involved, who have reportedly acknowledged the work.

The authors offer a slate of recommendations for improving the current state of the art in FLV, including:

- abandoning single-image authentication (‘Image-based FLV’), where authentication is based on a single frame from a customer’s camera feed;
- more flexible and comprehensive updating of deepfake detection systems across the image and voice domains;
- requiring that the voice in user-submitted video be synchronized with lip movements (which, in general, is not currently checked);
- requiring users to perform gestures and movements that are currently difficult for deepfake systems to reproduce (for instance, profile views and partial obfuscation of the face).
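One way to act on the last recommendation is to issue each session an unpredictable mix of challenges weighted toward poses that deepfakes handle poorly. The sketch below is hypothetical: the challenge wording and pool sizes are illustrative assumptions, not taken from the paper. It uses Python's `secrets` module so that the requested actions cannot be predicted and pre-rendered.

```python
import secrets

# Hypothetical challenge pools. The "hard" entries follow the paper's suggestion
# of profile views and partial face obfuscation.
HARD_CHALLENGES = (
    "turn head to full left profile",
    "turn head to full right profile",
    "cover part of your face with your hand",
)
EASY_CHALLENGES = ("blink", "nod", "open mouth")


def issue_challenges(n_hard: int = 1, n_easy: int = 1) -> list[str]:
    """Pick an unpredictable challenge sequence for one verification session."""
    if n_hard > len(HARD_CHALLENGES) or n_easy > len(EASY_CHALLENGES):
        raise ValueError("not enough distinct challenges in the pool")
    rng = secrets.SystemRandom()  # OS-backed CSPRNG, not the seeded default PRNG
    picks = rng.sample(HARD_CHALLENGES, n_hard) + rng.sample(EASY_CHALLENGES, n_easy)
    rng.shuffle(picks)  # randomize the order as well as the selection
    return picks
```

The design favors harder challenges over more of them. The paper reports that simply adding more actions brings no security gain, so what matters is the type of challenge.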

The paper is titled Seeing is Living? Rethinking the Security of Facial Liveness Verification in the Deepfake Era, and comes from joint lead authors Changjiang Li and Li Wang, and five other authors from Pennsylvania State University, Zhejiang University, and Shandong University.

The Core Targets

The researchers targeted the ‘six most representative’ Facial Liveness Verification (FLV) vendors, which have been anonymized with cryptonyms in the research.

The vendors are represented thus: ‘BD’ and ‘TC’ represent a conglomerate supplier with the largest number of face-related API calls, and the biggest share of China’s AI cloud services; ‘HW’ is ‘one of the vendors with the largest [Chinese] public cloud market’; ‘CW’ has ‘the fastest growth rate in computer vision, and is attaining a leading market position’; ‘ST’ is among the biggest computer vision vendors; and ‘iFT’ numbers among the largest AI software vendors in China.

Data and Architecture

The underlying data powering the project includes a dataset of 625,537 images from the Chinese initiative CelebA-Spoof, together with live videos from Michigan State University’s 2019 SiW-M dataset.

All the experiments were conducted on a server with two 2.40GHz Intel Xeon E5-2640 v4 CPUs, 256 GB of RAM, a 4TB HDD, and four NVIDIA 1080Ti GPUs (11GB each), for a total of 44GB of VRAM.

Six in One

The framework devised by the paper’s authors is called LiveBugger, and incorporates six state-of-the-art deepfake frameworks ranged against the four chief defenses in FLV systems.

LiveBugger contains diverse deepfake approaches, and centers on the four main attack vectors in FLV systems.

The six deepfake frameworks utilized are: Oxford University’s 2018 X2Face; the US academic collaboration ICface; two variations of the 2019 Israeli project FSGAN; the Italian First Order Motion Model (FOMM), from early 2020; and Peking University’s Microsoft Research collaboration FaceShifter. Since FaceShifter is not open source, the authors had to reconstruct it from the published architecture details.

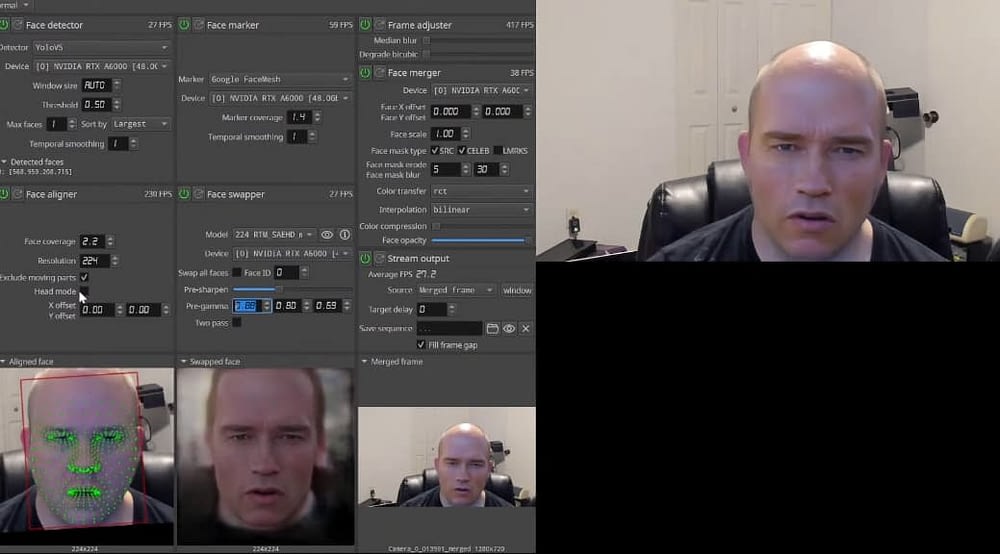

The attack methods built on these frameworks included pre-rendered video and ‘deepfake puppetry’. In the pre-rendered videos, the subjects of the spoof perform rote actions that an earlier evaluation module of LiveBugger extracted from the API’s authentication requirements. Deepfake puppetry translates the live movements of an individual into a deepfaked stream, which is then inserted into a co-opted webcam feed.

An example of the latter is DeepFaceLive, which debuted in summer 2021 as an adjunct program to the popular DeepFaceLab to enable real-time deepfake streaming. It was not included in the authors’ research.

Attacking the Four Vectors

The four attack vectors within a typical FLV system are: image-based FLV, which employs a single user-provided photo as an authentication token against a facial ID that’s on record with the system; silence-based FLV, which requires that the user upload a video clip of themselves; action-based FLV, which requires the user to perform actions dictated by the platform; and voice-based FLV, which matches a user’s prompted speech against the system’s database entry for that user’s speech pattern.
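The four FLV variants differ mainly in what the user must submit. The sketch below encodes that distinction as a small data structure. The enum names and input labels are illustrative assumptions, not identifiers from the paper or from any vendor API.

```python
from enum import Enum


class FLVMode(Enum):
    """The four FLV variants described above (names are illustrative)."""
    IMAGE = "image"
    SILENT = "silent"
    ACTION = "action"
    VOICE = "voice"


# What each mode asks the user to submit, following the descriptions above.
REQUIRED_INPUT: dict[FLVMode, frozenset[str]] = {
    FLVMode.IMAGE: frozenset({"photo"}),
    FLVMode.SILENT: frozenset({"video"}),
    FLVMode.ACTION: frozenset({"video", "dictated actions"}),
    FLVMode.VOICE: frozenset({"video", "prompted speech"}),
}


def needs_video(mode: FLVMode) -> bool:
    """True if the mode cannot be satisfied by a single still image."""
    return "video" in REQUIRED_INPUT[mode]


def needs_audio(mode: FLVMode) -> bool:
    """True if the mode also checks the user's speech."""
    return "prompted speech" in REQUIRED_INPUT[mode]
```

Each additional required input is another medium an attacker must synthesize convincingly. For image-based FLV, a single forged frame suffices.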

The first challenge for LiveBugger is establishing the extent to which an API discloses its requirements, since disclosed requirements can be anticipated and catered to in the deepfaking process. This is handled by LiveBugger’s Intelligence Engine, which gathers information on requirements from publicly available API documentation and other sources.

An API’s actual routines may, for various reasons, not match its published requirements. The Intelligence Engine therefore also incorporates a probe that infers implicit requirements from the results of exploratory API calls. In the research project, this was facilitated by official offline ‘test’ APIs provided for the benefit of developers, and by volunteers who offered to use their own live accounts for testing.

The Intelligence Engine searches for evidence of whether an API currently uses any particular approach that would affect an attack. One such feature is coherence detection, which checks whether the frames in a video are temporally continuous. Its presence can be established by submitting video with scrambled frames and observing whether authentication then fails.
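The scrambled-frame probe can be sketched as a black-box test. Here, `verify` is a hypothetical stand-in for a call to the API under test, and the majority-vote threshold is an assumption. This is not LiveBugger's actual implementation, which the article does not describe at code level.

```python
import random
from typing import Any, Callable, Sequence


def probe_coherence_detection(
    verify: Callable[[Sequence[Any]], bool],
    frames: Sequence[Any],
    trials: int = 5,
    seed: int = 0,
) -> bool:
    """Infer whether a black-box liveness check enforces temporal coherence.

    `verify` (hypothetical) returns True when the submitted frames are accepted.
    If the genuine clip passes but frame-shuffled copies are mostly rejected,
    the API is presumably checking that frames are temporally continuous.
    """
    if not verify(frames):
        raise ValueError("baseline clip must pass before probing")
    rng = random.Random(seed)  # seeded so probe runs are reproducible
    rejected = 0
    for _ in range(trials):
        shuffled = list(frames)
        rng.shuffle(shuffled)
        if not verify(shuffled):
            rejected += 1
    # Treat a majority of rejections as evidence of coherence detection.
    return rejected > trials // 2
```

Repeating the shuffle over several trials guards against the unlikely case where a shuffle leaves the order nearly intact.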

The module also searches for Lip Language Detection, where the API might check to see if the sound in the video is synchronized to the user’s lip movements (rarely the case – see ‘Results’ below).
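A crude version of such a lip-sync check correlates a per-frame mouth-opening signal with per-frame audio energy, allowing a small time offset between the two. This is a hypothetical sketch. It assumes that mouth aperture comes from some face-landmark model and that audio energy has already been resampled to the video frame rate. It does not represent how any of the studied vendors implement the check.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length series (0.0 if either is flat)."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # a flat signal carries no evidence of synchronization
    return sxy / math.sqrt(sxx * syy)


def lips_match_audio(
    mouth_opening: Sequence[float],
    audio_energy: Sequence[float],
    max_lag: int = 3,
    threshold: float = 0.5,
) -> bool:
    """Accept if mouth movement and audio energy correlate at some small lag."""
    n = len(mouth_opening)
    if len(audio_energy) != n:
        raise ValueError("signals must be sampled at the same frame rate")
    best = -1.0
    for lag in range(-max_lag, max_lag + 1):
        # Shift one signal against the other by `lag` frames.
        if lag >= 0:
            m, a = mouth_opening[lag:], audio_energy[: n - lag]
        else:
            m, a = mouth_opening[: n + lag], audio_energy[-lag:]
        if len(m) >= 2:
            best = max(best, pearson(m, a))
    return best >= threshold
```

APIs that analyze audio and video as separate quantities skip this cross-check entirely. That gap is what lets separately synthesized speech pass.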

Results

The authors found that none of the six evaluated APIs used coherence detection at the time of the experiments. This allowed LiveBugger’s deepfake engine to simply stitch together synthesized audio with deepfaked video, based on material contributed by volunteers.

However, some downstream applications (i.e. customers of the API frameworks) were found to have added coherence detection to the process, necessitating the pre-recording of a video tailored to circumvent this.
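A downstream application could implement a basic coherence check by looking for abrupt jumps between consecutive frames. The heuristic below is a hypothetical sketch: it represents frames as flat lists of pixel values, and the jump factor is an assumed parameter. It is not the method used by any application in the study.

```python
from statistics import median
from typing import Sequence


def frame_diffs(frames: Sequence[Sequence[float]]) -> list[float]:
    """Mean absolute pixel difference between each pair of consecutive frames."""
    return [
        sum(abs(a - b) for a, b in zip(f0, f1)) / len(f0)
        for f0, f1 in zip(frames, frames[1:])
    ]


def looks_temporally_coherent(
    frames: Sequence[Sequence[float]], jump_factor: float = 4.0
) -> bool:
    """Reject clips whose largest frame-to-frame jump dwarfs the typical motion."""
    diffs = frame_diffs(frames)
    if not diffs:
        return True  # zero or one frame: nothing to compare
    typical = max(median(diffs), 1e-6)  # floor avoids a zero baseline on static scenes
    return max(diffs) <= jump_factor * typical
```

A pre-recorded clip that plays continuously passes this test. That explains why the authors had to tailor pre-recorded video for applications that added coherence detection, rather than relying on stitched material.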

Additionally, only a few of the API vendors use lip language detection; for most of them, the video and audio are analyzed as separate quantities, and there is no functionality that attempts to match the lip movement to the provided audio.

Diverse results spanning the range of fake techniques available in LiveBugger against the varied array of attack vectors in FLV APIs. Higher numbers indicate a greater rate of success in penetrating FLV using deepfake techniques. Not all APIs include all the possible defenses for FLV; for instance, several do not offer any defense against deepfakes, while others do not check that lip movement and audio match up in user-submitted video during authentication.

Conclusion

The paper’s results and their implications for the future of FLV APIs are labyrinthine. The authors have distilled them into a functional ‘architecture of vulnerabilities’ that could help FLV developers better understand some of the issues uncovered.

The paper’s network of recommendations regarding the existing and potential susceptibility of face-based video identification routines to deepfake attack.

The recommendations note:

‘The security risks of FLV widely exist in many real-world applications, and thus threaten the security of millions of end-users.’

The authors also observe that the use of action-based FLV is ‘marginal’, and that increasing the number of actions that users are required to perform ‘cannot bring any security gain’.

Further, the authors note that combining voice recognition and temporal face recognition (in video) is a fruitless defense unless the API providers begin to demand that lip movements are synced to audio.
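The point can be expressed as a decision rule. If face and voice are scored independently, an attacker can pair deepfaked video with separately synthesized speech. Adding a lip-sync term forces the two to agree. The score names and thresholds below are hypothetical placeholders, not values from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VerificationScores:
    face_match: float   # similarity to the enrolled face, assumed in [0, 1]
    voice_match: float  # similarity to the enrolled voiceprint, assumed in [0, 1]
    lip_sync: float     # agreement between audio and lip movement


def accept(
    s: VerificationScores,
    face_t: float = 0.8,
    voice_t: float = 0.8,
    sync_t: float = 0.5,
) -> bool:
    """Accept only if face, voice AND lip sync all clear their thresholds.

    Dropping the lip_sync condition reduces this to two independent checks,
    which is the configuration the authors describe as a fruitless defense.
    """
    return (
        s.face_match >= face_t
        and s.voice_match >= voice_t
        and s.lip_sync >= sync_t
    )
```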

The paper comes in the wake of a recent FBI warning to businesses about the dangers of deepfake fraud. That warning arrived nearly a year after the bureau predicted the technology’s use in foreign influence operations, and amid general fears that live deepfake technology will facilitate a novel crime wave against a public that still trusts video authentication security architectures.

These are still the early days of deepfakes as an authentication attack surface. Even so, in 2020, $35 million was fraudulently extracted from a bank in the UAE using deepfake audio technology, and in 2019 a UK executive was likewise scammed into disbursing $243,000.

First published 23rd February 2022.
