Facebook and its new parent company, Meta, have been making headlines lately as the company tries to recover from the backlash over internal documents leaked by a former employee. As Meta works toward Zuckerberg’s vision of the metaverse, it is looking for new ways to advance technology and move public attention past its most recent woes. This includes Meta’s recent removal of the Facial Recognition system on Facebook.

The latest announcement from Meta spans the combined areas of artificial intelligence (AI), robotics, and tactile sensing. As robots become able to communicate and to handle more detailed and complicated programming, Meta wants them to also be able to communicate through touch, as humans do. It describes this endeavor as resting on four pillars of the touch-sensing ecosystem: hardware, simulators, libraries, and benchmarks and data sets. Meta believes these pillars are necessary for building AI systems that can both understand and interact through touch.

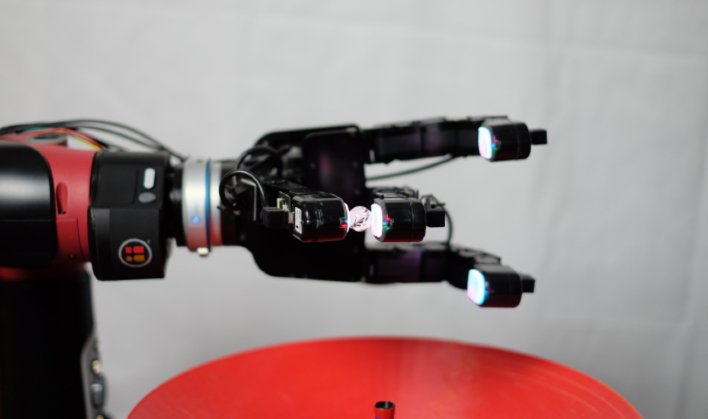

One of the areas covered by the pillars is tactile sensing, a field of robotics that studies how robots can understand and replicate human-level touch in the physical world. As the technology progresses, the hope is that it can work both as a standalone technology and in combination with audio and visual technologies. According to Meta, the end result should be safer and kinder robots.

The first step in advancing tactile sensing is to develop sensors that can collect touch data and enable it to be processed. Meta believes the sensor should be small enough to mimic a human fingertip, a degree of miniaturization it admits is too costly for most of the academic community to fund. Next, these fingertip-sized sensors need to be durable enough to withstand the repeated tasks they would likely be asked to carry out. Finally, they need to be sensitive enough to collect rich data about surface texture and features, contact force, and the other cues a fingertip uses to discern exactly what it is feeling.

Meta is looking to use DIGIT, the fully open-source sensor design it released in 2020. According to Meta, this design is cheaper to manufacture and provides hundreds of thousands more contact points than the commercial tactile sensors currently on the market. Meta AI has partnered with GelSight to manufacture DIGIT commercially and make it more affordable for researchers. Meta AI has also agreed to collaborate with Carnegie Mellon University to develop ReSkin, an open-source touch-sensing “skin” with a small form factor.

TACTO is another open-source technology developed by Meta AI. It is a simulator for high-resolution vision-based tactile sensors, built to support machine learning (ML) research. It is meant for cases where hardware is not available, providing a platform that reduces the need for real-world experiments. This could save researchers a lot of time and money, as it would lessen wear and tear on the hardware and help ensure the right hardware is being used.

Oier Mees, a doctoral research assistant in autonomous intelligent systems at the Institute of Computer Science at the University of Freiburg, says, “TACTO and DIGIT have democratized access to vision-based tactile sensing by providing low-cost reference implementations which enabled me to quickly prototype multimodal robot manipulation policies.”

To reduce deployment time and increase code reuse, Meta created PyTouch, a library of ML models and functionality for touch sensing. The goal is for PyTouch eventually to integrate with both real-world sensors and Meta’s tactile-sensing simulator, along with Sim2Real capabilities. Researchers will be able to use PyTouch “as a service”: they can connect their DIGIT, download a pretrained model, and use it as a building block in their own application.

We are only beginning to understand and develop AI across many areas of research. The opportunities that AI and ML present can produce feelings of both exhilaration and fear. Meta’s advancements in these areas can produce those same feelings as we wait to see where the company intends to take these technologies and how it will use them. While we can joke that Meta is simply creating technology to make Mark Zuckerberg more human, we should also keep a watchful eye, filled with amazement, on all the companies researching and producing these technologies.
